Clive, one of our lead Solution Architects with a lifetime of delivering IBM FileNet solutions, continues his Journey to the Cloud – this time recalling his experience of deploying IBM’s Cloud Pak for Business Automation using a local registry and provisioning an OpenShift cluster on local servers. To catch up on his previous blogs, here’s part 1, part 2 and part 3.

ICP4BA deployment using a local registry and provisioning an Openshift cluster on local servers

This post has been a long time coming!

Just as I started before Christmas (that seems a while ago now), the issue around Log4j hit, and everyone, including IBM, was busy patching and testing systems for vulnerabilities. I decided to wait for things to quiet down and for statements from IBM about the containers that make up Cloud Pak for Business Automation. I also wanted to try something new to see if the lag of downloading all the containers as required was causing some of the timing issues that I believe caused deployment problems.

I’m going to step through the process of getting copies of the Cloud Pak containers to a local Docker repository and deploy a production environment.

– Please note that in the detail below, I have replaced the domain name, machine names and any other references with sample values.

Openshift Cluster Setup.

I started this exercise by creating a completely new OpenShift 4.8 cluster using the Assisted Installer from cloud.redhat.com. I created the base VMs: in our case, 3 master nodes with 16GB RAM, 300GB HDD and 8 virtual cores, and 9 worker nodes with 32GB RAM, 300GB HDD and 8 virtual cores. I also set the DHCP server to issue static IPs to the 12 machines, each with a name in a local domain (os.local), as the cluster will only be available inside the company network. Two further static IPs were added to the DNS: one for the API (api.cl.os.local) and a wildcard for the deployed apps (*.apps.cl.os.local).

A further Linux server was created to allow for cluster management. This server was used to create the SSH key for the OpenShift cluster during the setup on cloud.redhat.com. Once the cluster had been configured, the ISO for the install was created and downloaded, and each VM was started with the ISO as the boot disk. A few clicks later…and an hour or so…and the cluster was up and running, ready to go.

Now, finishing the pre-reqs for the deployment.

This involves creating an NFS server, used for the shared storage class, and installing the command-line tools needed to control the OpenShift cluster (oc, Podman and skopeo, plus a copy of the kubeconfig). Once I was able to log in to the OpenShift cluster, I created a new Storage Class using the NFS client provisioner template:

oc process -f https://raw.githubusercontent.com/openshift-examples/external-storage-nfs-client/main/openshift-template-nfs-client-provisioner.yaml \
-p NFS_SERVER=nfs.os.local \
-p NFS_PATH=/osnfs | oc apply -f -

I then changed the OpenShift Image Registry to use this storage class:

oc edit configs.imageregistry.operator.openshift.io

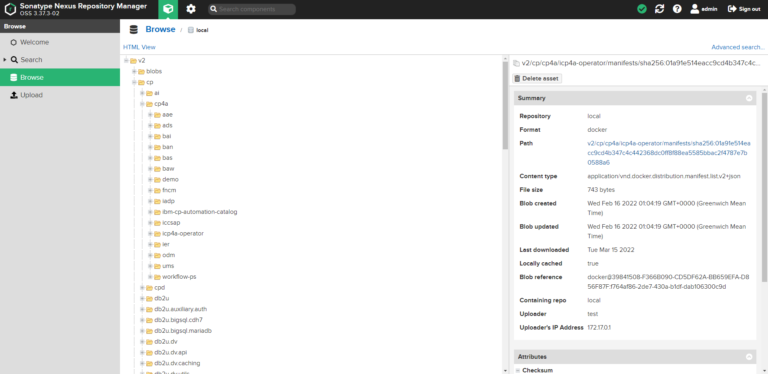

Finally, I created a further Linux server running Docker and deployed Nexus3 as a local Docker repository.

Mirroring Containers

Mirroring the containers is well documented on the IBM Knowledge Center (Preparing an air gap environment – IBM Documentation), so I’m just going to describe the commands I used.

I used the server created earlier, with all the command-line tools installed. I created an env.sh file with the following values:

NAMESPACE=demo

CASE_NAME=ibm-cp-automation

CASE_VERSION=3.2.4

CASE_INVENTORY_SETUP=cp4aOperatorSetup

CASE_ARCHIVE=${CASE_NAME}-${CASE_VERSION}.tgz

OFFLINEDIR=~/offline

CASE_REPO_PATH=https://github.com/IBM/cloud-pak/raw/master/repo/case

CASE_LOCAL_PATH=${OFFLINEDIR}/${CASE_ARCHIVE}

SOURCE_REGISTRY=cp.icr.io

SOURCE_REGISTRY_USER=cp

SOURCE_REGISTRY_PASS=IBMCloudAccessTokenHere

LOCAL_REGISTRY_HOST=docker.os.local

LOCAL_REGISTRY_PORT=':5000'

LOCAL_REGISTRY=${LOCAL_REGISTRY_HOST}${LOCAL_REGISTRY_PORT}

LOCAL_REGISTRY_USER=image-pull

LOCAL_REGISTRY_PASS=password

USE_SKOPEO=true

This means I can set these variables each session by just typing source env.sh

With the variables set, and the Nexus3 repository set as a local insecure registry (HTTP traffic only), I just ran through the following commands and waited (around 8 hours) for the containers to be copied locally.
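Because every later command relies on these variables, an empty one (for example, after opening a new session and forgetting to source the file) produces confusing failures. The following is a minimal sketch of a guard that could be run after sourcing env.sh; require_vars is a hypothetical helper name, not part of cloudctl or oc.

```shell
#!/bin/sh
# Sketch only: require_vars is a hypothetical helper, not an IBM or Red Hat tool.
# It returns non-zero if any of the named shell variables is unset or empty.
require_vars() {
    missing=0
    for name in "$@"; do
        # POSIX-compatible indirect lookup of the variable named by $name.
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "missing or empty: $name" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example usage after `source env.sh` (left commented out in this sketch):
# require_vars NAMESPACE CASE_NAME CASE_VERSION CASE_LOCAL_PATH OFFLINEDIR \
#     SOURCE_REGISTRY LOCAL_REGISTRY LOCAL_REGISTRY_USER LOCAL_REGISTRY_PASS || exit 1
```

The check only confirms that the values are present; it does not verify that the registry is reachable or that the credentials are valid.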

cloudctl case save --repo ${CASE_REPO_PATH} --case ${CASE_NAME} --version ${CASE_VERSION} --outputdir ${OFFLINEDIR}

cloudctl case launch --case ${CASE_LOCAL_PATH} --inventory ${CASE_INVENTORY_SETUP} --action configure-creds-airgap --namespace ${NAMESPACE} --args "--registry ${SOURCE_REGISTRY} --user ${SOURCE_REGISTRY_USER} --pass ${SOURCE_REGISTRY_PASS}"

cloudctl case launch --case ${CASE_LOCAL_PATH} --inventory ${CASE_INVENTORY_SETUP} --action configure-creds-airgap --namespace ${NAMESPACE} --args "--registry ${LOCAL_REGISTRY} --user ${LOCAL_REGISTRY_USER} --pass ${LOCAL_REGISTRY_PASS}"

cloudctl case launch --case ${CASE_LOCAL_PATH} --inventory ${CASE_INVENTORY_SETUP} --action configure-cluster-airgap --namespace ${NAMESPACE} --args "--registry ${LOCAL_REGISTRY} --user ${LOCAL_REGISTRY_USER} --pass ${LOCAL_REGISTRY_PASS} --inputDir ${OFFLINEDIR}"

oc patch image.config.openshift.io/cluster --type=merge -p '{"spec":{"registrySources":{"insecureRegistries":["'${LOCAL_REGISTRY}'"]}}}'

cloudctl case launch --case ${CASE_LOCAL_PATH} --inventory ${CASE_INVENTORY_SETUP} --action mirror-images --namespace ${NAMESPACE} --args "--registry ${LOCAL_REGISTRY} --user ${LOCAL_REGISTRY_USER} --pass ${LOCAL_REGISTRY_PASS} --inputDir ${OFFLINEDIR}"

Once the containers were copied locally, everything was set to deploy a system. Installing the catalogues and operators is again documented, but it was just two commands:

cloudctl case launch --case ${OFFLINEDIR}/${CASE_ARCHIVE} --inventory ${CASE_INVENTORY_SETUP} --action install-catalog --namespace ${NAMESPACE} --args "--registry ${LOCAL_REGISTRY} --inputDir ${OFFLINEDIR} --recursive"

cloudctl case launch --case ${OFFLINEDIR}/${CASE_ARCHIVE} --inventory ${CASE_INVENTORY_SETUP} --action install-operator --namespace ${NAMESPACE} --args "--registry ${LOCAL_REGISTRY} --inputDir ${OFFLINEDIR}"

Once the operators were loaded it was just a matter of:

- Create the database schemas

- Add the secrets with the database and LDAP credentials

- Create 2 PVCs for the operator

- Copy the JDBC drivers to the operator-shared-pvc

- Apply the configured CR YAML based on the samples from the downloaded case file used for mirroring
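For the PVC step in the list above, a manifest can be generated and piped to oc rather than written by hand. This is a rough sketch only: pvc_manifest is a hypothetical helper, the ReadWriteMany access mode, the 1Gi size and the storage class name are assumptions for illustration, and the IBM documentation for your release is the authority on the real names and sizes. Only operator-shared-pvc is a name taken from this post.

```shell
#!/bin/sh
# Sketch only: pvc_manifest is a hypothetical helper.
# Usage: pvc_manifest NAME STORAGE_CLASS SIZE
# Prints a PersistentVolumeClaim manifest to stdout.
pvc_manifest() {
    cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: $2
  resources:
    requests:
      storage: $3
EOF
}

# Example usage (commented out; the storage class and size are placeholders):
# pvc_manifest operator-shared-pvc my-nfs-storage-class 1Gi | oc apply -n "${NAMESPACE}" -f -
```

Running the function on its own prints the YAML, which makes it easy to review before anything is applied to the cluster.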

Lessons learned and some tips

I started the process just after we returned from the Christmas break, on Jan 4th 2022, and at that time I was not able to run the mirror scripts successfully. There was an issue that was corrected with iFix 4, and there are still issues with a catalog being requested but not available in the local repository. This hasn’t impacted my deployment yet, as it is a PostgreSQL catalog that I’ve not seen used.

It all seems very straightforward, but there is a lot of waiting around, restarting commands and checking that commands have been actioned correctly. More specifically, the mirror command sometimes times out, which requires a restart and a wait while it validates which containers have already been loaded. It took eight hours for the final run-through of the mirroring command.
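The restarts described here were done by hand. As an alternative, a small retry wrapper could rerun a command that times out. The sketch below is a generic shell helper (retry is a hypothetical name, and the attempt count and delay are arbitrary), not a feature of cloudctl.

```shell
#!/bin/sh
# Sketch only: retry is a hypothetical helper, not part of cloudctl.
# Usage: retry MAX_ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Reruns COMMAND until it exits 0 or MAX_ATTEMPTS have been used.
retry() {
    max_attempts=$1
    delay_seconds=$2
    shift 2
    attempt=1
    while :; do
        if "$@"; then
            return 0
        fi
        if [ "$attempt" -ge "$max_attempts" ]; then
            echo "giving up after $attempt attempts" >&2
            return 1
        fi
        echo "attempt $attempt failed; retrying in ${delay_seconds}s" >&2
        sleep "$delay_seconds"
        attempt=$((attempt + 1))
    done
}

# Example usage (commented out), wrapping the mirror-images action:
# retry 5 60 cloudctl case launch --case "${CASE_LOCAL_PATH}" \
#     --inventory "${CASE_INVENTORY_SETUP}" --action mirror-images \
#     --namespace "${NAMESPACE}" \
#     --args "--registry ${LOCAL_REGISTRY} --user ${LOCAL_REGISTRY_USER} --pass ${LOCAL_REGISTRY_PASS} --inputDir ${OFFLINEDIR}"
```

A wrapper like this only helps when a plain rerun is enough; it does nothing for the case where stale files have to be cleared before the mirror will start again.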

Running the configure-cluster-airgap action forces an update on each node, which marks the node as unavailable, drains the node and restarts the VM. This could be an issue on a production cluster.

Running the mirror as a user other than root causes a permission issue, so I had to change the permissions on the /var/tmp folder.
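One way to know when the nodes have finished restarting is to watch the machine config pools. This sketch assumes the restarts are driven by the OpenShift Machine Config Operator, which is the usual mechanism when cluster-wide registry settings change; wait_for_node_rollout is a hypothetical helper and the 60m default is arbitrary.

```shell
#!/bin/sh
# Sketch only: wait_for_node_rollout is a hypothetical helper.
# Assumes the node restarts are rolled out by the Machine Config Operator.
# Usage: wait_for_node_rollout [TIMEOUT]   (TIMEOUT in oc duration form, e.g. 60m)
wait_for_node_rollout() {
    rollout_timeout=${1:-60m}
    # Show the current state of each pool (master, worker, ...).
    oc get machineconfigpool
    # Block until every pool reports the Updated condition, or the timeout expires.
    oc wait machineconfigpool --all --for=condition=Updated --timeout="$rollout_timeout"
}

# Example usage (commented out):
# wait_for_node_rollout 90m && oc get nodes
```

The pools can take a short while to begin updating after a change, so an immediate success is worth double-checking with oc get nodes.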

The documentation says 200GB of disk space; I’m using 638GB.
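Given that gap, it may be worth checking free space before starting an eight-hour mirror. The sketch below uses only df and awk; free_gb and has_space are hypothetical helper names, and which filesystems to check (the offline directory, the registry's storage, or both) and the 650 threshold in the usage line are assumptions for illustration.

```shell
#!/bin/sh
# Sketch only: free_gb and has_space are hypothetical helpers.

# free_gb DIR: print the whole gigabytes available on the filesystem holding DIR.
free_gb() {
    # -P forces one POSIX-format line per filesystem; -k reports 1024-byte blocks.
    # Column 4 of the second line is the available space in KiB.
    df -Pk "$1" | awk 'NR == 2 { printf "%d\n", $4 / 1024 / 1024 }'
}

# has_space DIR NEEDED_GB: succeed only if at least NEEDED_GB are free under DIR.
has_space() {
    [ "$(free_gb "$1")" -ge "$2" ]
}

# Example usage (commented out; 650 is an illustrative threshold):
# has_space "${OFFLINEDIR}" 650 || echo "not enough free space for mirroring" >&2
```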

If the mirroring shows nothing to do but the script hasn’t reported successful completion (usually after a Ctrl+C), delete all the files from the /tmp folder and from ~/offline/docker.os.local (where docker.os.local is the local repository server name or IP address defined in the env.sh file).

I’ve also tried this with a JFrog Artifactory local repository. The mirroring is successful, but to deploy, anonymous access must be enabled for the repository. My eval period ended before I was able to deploy a system.

Useful Commands to check settings on the cluster

oc describe imageContentSourcePolicy/ibm-cp-automation

– this shows that the repository pulls will go to your local repository.

oc get secret pull-secret -n openshift-config --output="jsonpath={.data.\.dockerconfigjson}" | base64 --decode

– this shows the connection to the local repository has been configured

oc delete catalogsource cloud-native-postgresql-catalog -n openshift-marketplace

– I used this to remove the PostgreSQL catalog and stop the image pull error

Next time

Next time, in Clive’s Journey to the Cloud, he adds in some extra functions to the PostgreSQL base deployment and runs through some features and functions.